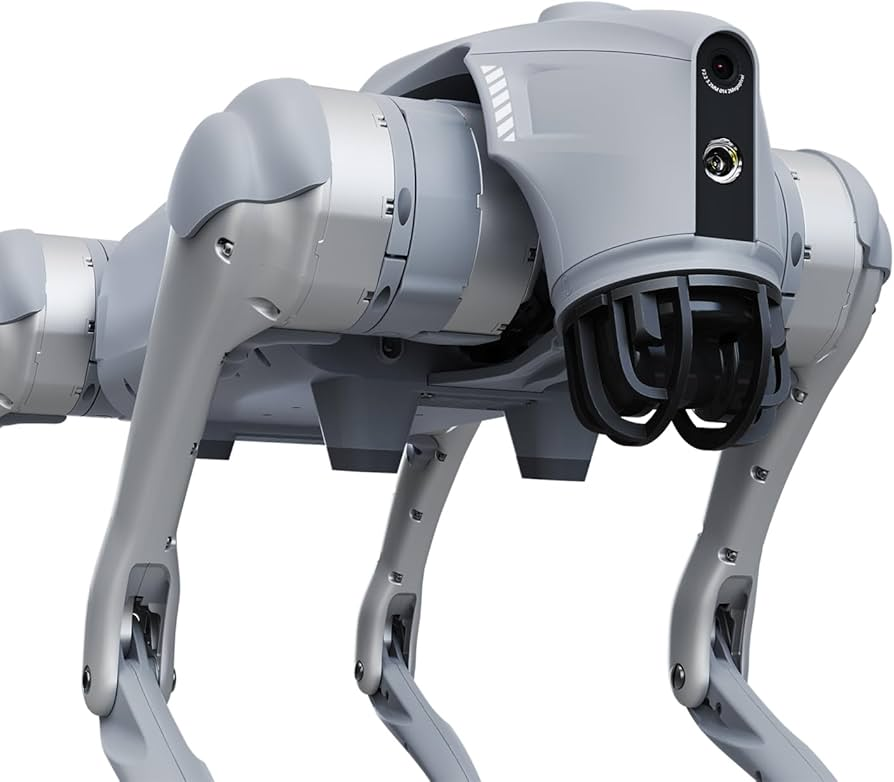

Quadruped Locomotion Through Mud (WIP)

Characterization and modeling of a non-Newtonian fluid in NVIDIA Isaac, followed by training a policy that adapts to varying mud concentrations. The policy is then deployed on a Unitree Go2 robot for testing in deep mud. The target speed is 10 body lengths per minute while maintaining stability.

Skills: NVIDIA Isaac, MuJoCo, Reinforcement Learning, QuadSDK, Python, ROS2

I am currently implementing mud physics in PhysX, including models of the suction, resistive, and shear forces acting on the quadruped's legs.

Using Webots to Design FSD for a Tesla Model 3

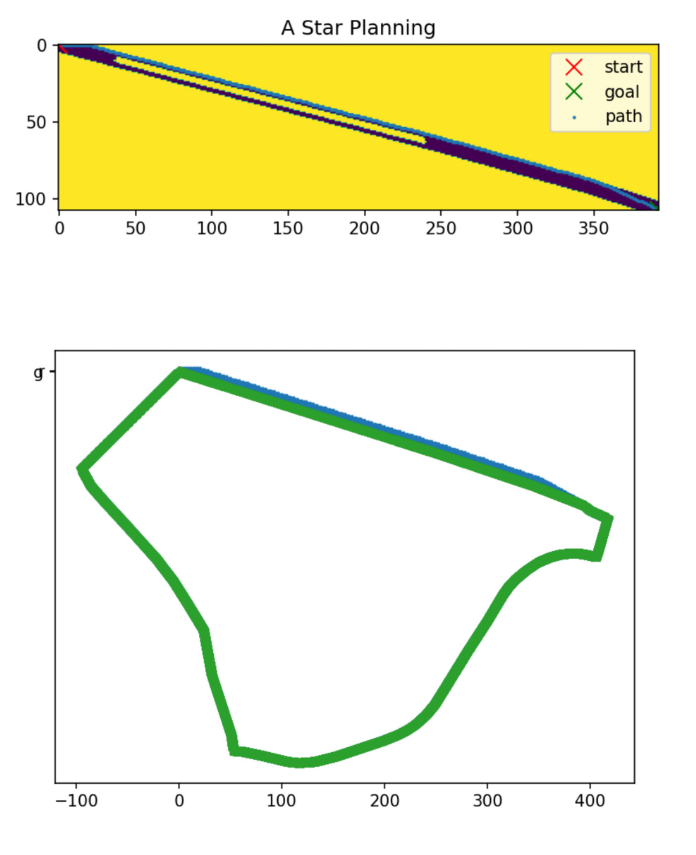

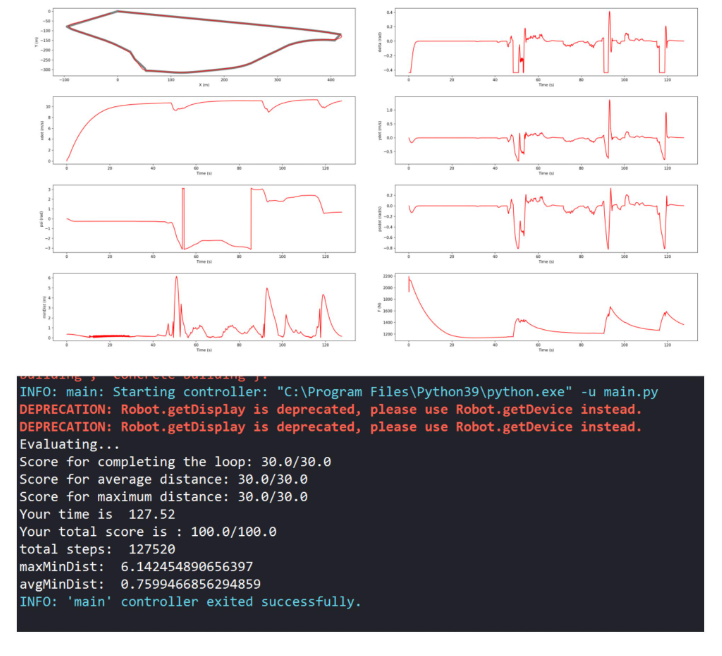

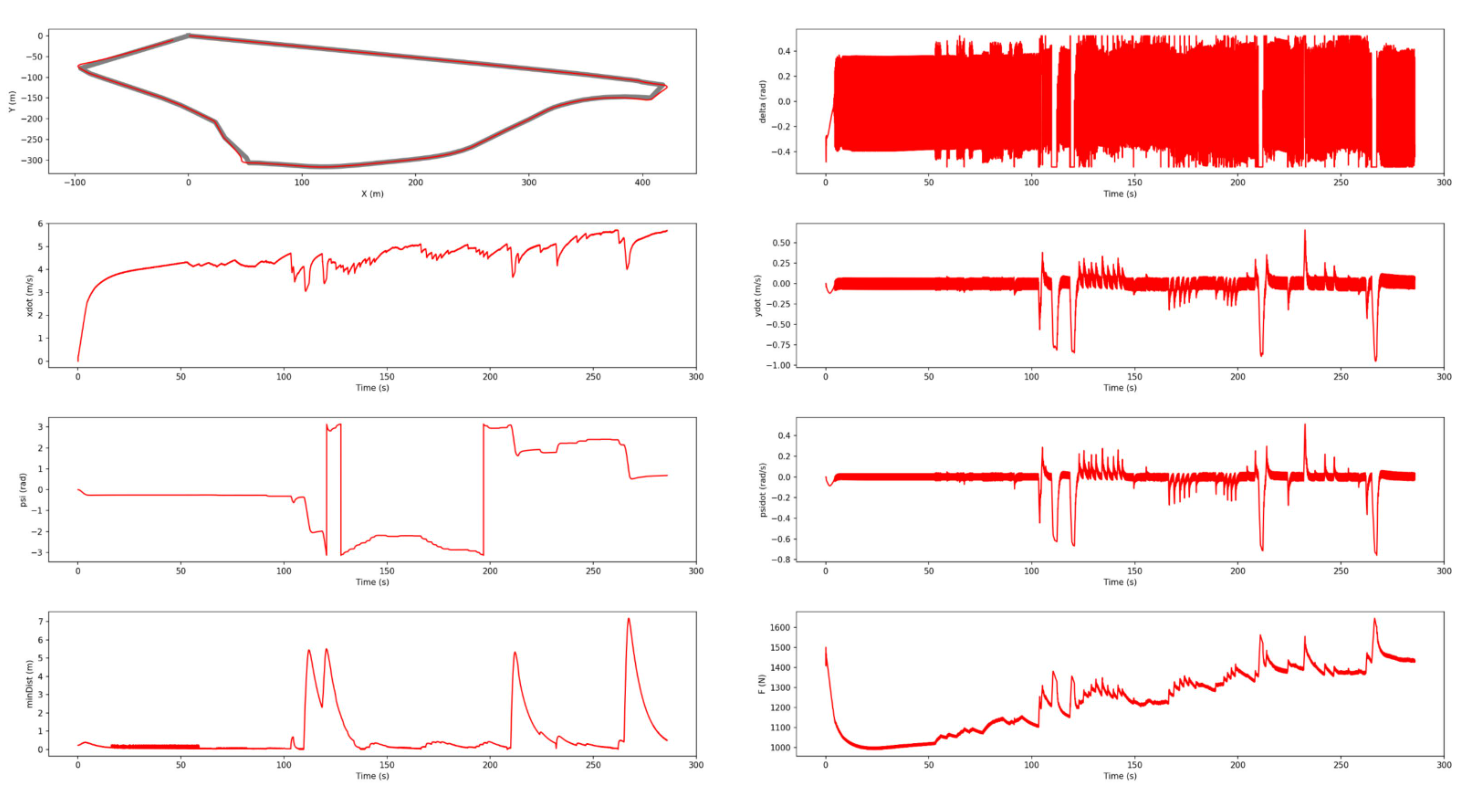

This project involved implementing a variety of efficient controllers and estimators for a self-driving vehicle. I ultimately implemented a PID controller, an LQR controller, and an MPC controller, then tested each one and plotted the results for comparison. The MPC controller produced the most responsive control, completing the track in under 128 seconds.

Skills: Linearization, PID, Controllability, Stabilizability, LQR, MPC, A*, EKF SLAM

I began by tuning the proportional, integral, and derivative gains to optimize PID performance. Next, because the longitudinal controller varies the speed, I linearized the lateral (steering) dynamics and designed the lateral controller using pole placement. I then discretized the model and implemented LQR, which after tuning completed the track in under 130 seconds and earned an additional 10 points of extra credit on the project. The key to this lap time was tuning the lookahead distance, which gave the vehicle more time to react to new information about the path ahead.
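To make the LQR step concrete, here is a minimal sketch of discrete-time LQR computed by iterating the Riccati equation. It assumes a hypothetical double-integrator model of lateral error (state: lateral error and its rate; input: a lateral acceleration command), a hypothetical 32 ms time step, and made-up weights; the project's actual vehicle model and tuning are not reproduced here.

```python
# Minimal discrete-time LQR sketch (pure Python, no NumPy).
# ASSUMPTION: double-integrator lateral-error model with a single input;
# the vehicle model used in the project is more detailed.

def mat_mul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def mat_add(a, b, sign=1.0):
    """Element-wise a + sign * b."""
    return [[a[i][j] + sign * b[i][j] for j in range(len(a[0]))]
            for i in range(len(a))]

def dlqr_single_input(A, B, Q, r, iters=500):
    """Iterate P = Q + A'PA - A'PB (r + B'PB)^-1 B'PA; return gain K and P."""
    P = [row[:] for row in Q]
    At, Bt = transpose(A), transpose(B)
    for _ in range(iters):
        BtP = mat_mul(Bt, P)                           # 1 x n
        denom = r + mat_mul(BtP, B)[0][0]              # scalar r + B'PB
        K = [[v / denom for v in mat_mul(BtP, A)[0]]]  # 1 x n gain
        AtP = mat_mul(At, P)
        P = mat_add(Q, mat_add(mat_mul(AtP, A), mat_mul(mat_mul(AtP, B), K), -1.0))
    return K, P

# Hypothetical model: state x = [lateral error (m), error rate (m/s)]
dt = 0.032
A = [[1.0, dt], [0.0, 1.0]]
B = [[0.5 * dt * dt], [dt]]
Q = [[1.0, 0.0], [0.0, 0.1]]
K, P = dlqr_single_input(A, B, Q, r=0.01)

# Closed-loop check: start 1 m off the path and apply u = -Kx
x = [1.0, 0.0]
for _ in range(2000):
    u = -(K[0][0] * x[0] + K[0][1] * x[1])
    x = [A[0][0] * x[0] + A[0][1] * x[1] + B[0][0] * u,
         A[1][0] * x[0] + A[1][1] * x[1] + B[1][0] * u]
```

Raising `r` penalizes control effort and yields smaller gains, while raising the first diagonal entry of `Q` makes the controller correct lateral error more aggressively.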

I also implemented path planning and estimation algorithms, namely A* and EKF SLAM. A* was used to replan the trajectory when a slow-moving car ahead acted as an obstacle, allowing my vehicle to overtake it on the straightaway. EKF SLAM was used as an estimator to determine the approximate position and heading of the vehicle.
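The overtaking behavior can be illustrated with a minimal A* sketch on a small occupancy grid. The grid (rows as lanes, columns as distance along the road), the unit move costs, and the position of the slow car are all hypothetical; the project itself ran in Webots with its own map representation.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected grid (0 = free, 1 = occupied).
    Returns the path as a list of (row, col) cells, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    g_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry; a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None

# Hypothetical 3-lane road: rows are lanes, columns are distance ahead.
# The slow car occupies lane 1 at columns 3-4.
road = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
path = astar(road, (1, 0), (1, 7))
```

Because the heuristic never overestimates the remaining cost, the first time the goal is popped the path is a shortest one; here the detour costs exactly two lane changes.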

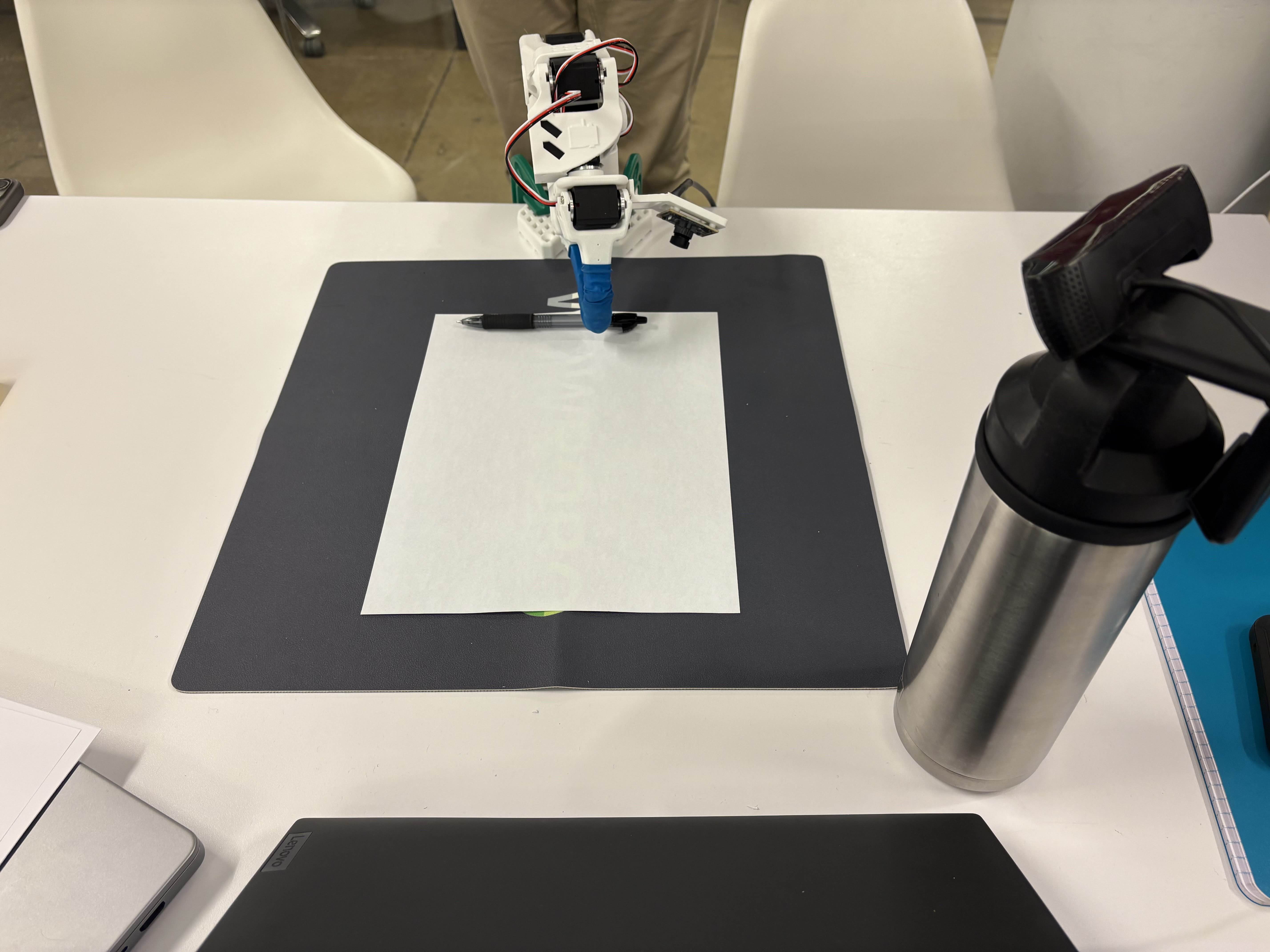

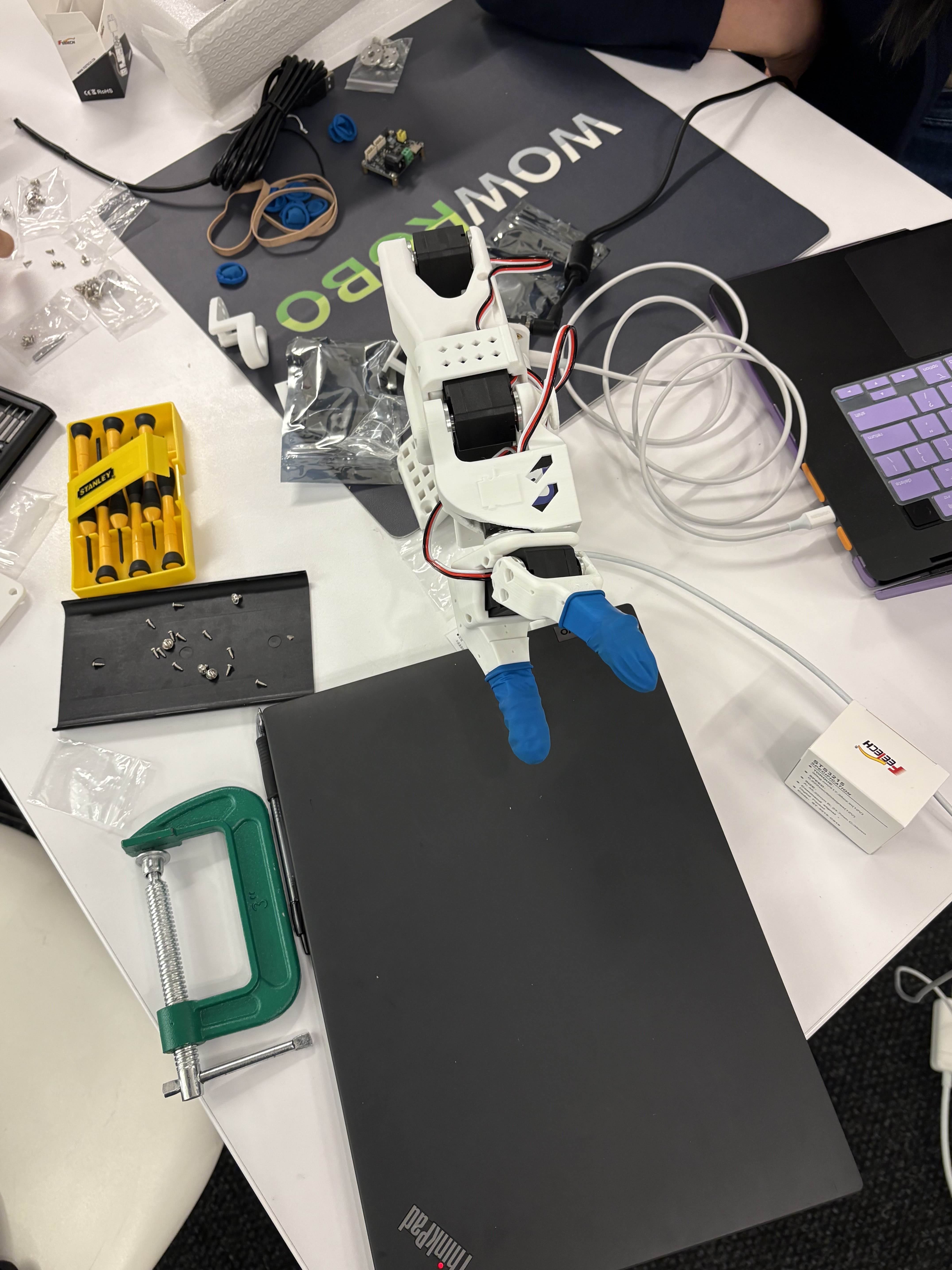

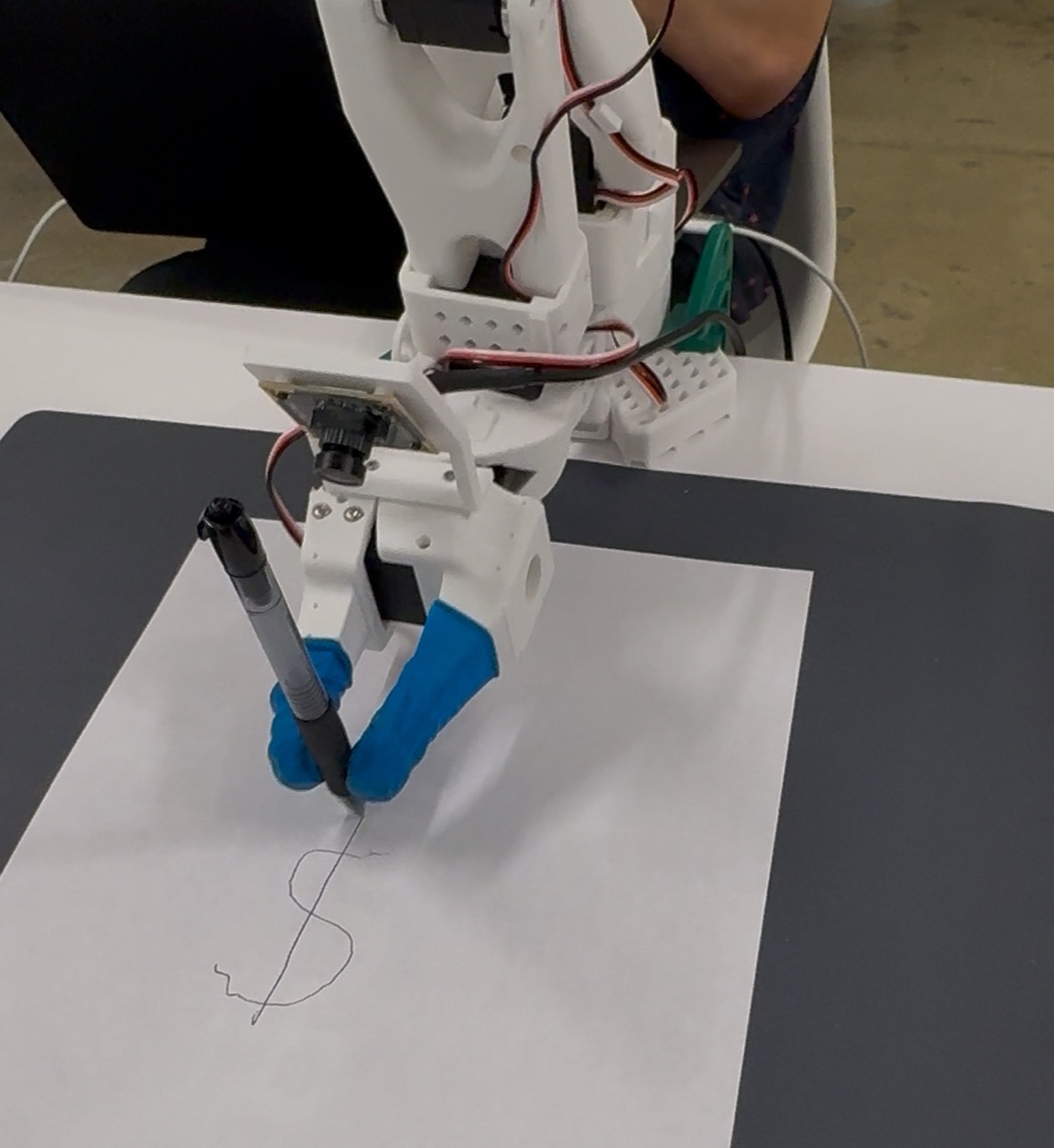

LeRobot Arm Hackathon NYC - SO-100 Robot

Constructed an SO-100 robot arm and calibrated it to match the leader arm's configuration. The policy was trained on teleoperated demonstrations, and filters were then added to reduce joint trembling and increase speed. The SO-100 was able to autonomously pick up a pen within its reachable workspace, draw a dollar sign, and put the pen back down. We also experimented with techniques such as frame skipping to double the task speed.

Skills: Calibration, Reinforcement Learning, ACT Policy, Signal Processing, Vision Feedback

Links: Devpost Link

Our team was given all the components needed to construct the SO-100 robot arm, including motors, controllers, 3D printed parts, and cameras. We spent the first 4-5 hours planning what to do with the robot, then constructing and calibrating it. During training, we noticed significant noise in the trained policy, which prevented the arm from picking up the pen.

By subtracting the joint curves recorded from the leader and follower arms, we were able to isolate most of the electrical noise in the system. A low-pass filter greatly reduced this noise, and "frame skipping", in which only every other frame was sent to the robot as a command, doubled its speed. My team won the $1,000 prize for "Most Innovative Use of Data".
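A minimal sketch of these two fixes, assuming a first-order exponential low-pass filter (the exact filter the team used is not specified) and made-up joint data with alternating electrical noise. `low_pass`, `frame_skip`, and the smoothing factor `alpha` are illustrative names and values.

```python
def low_pass(signal, alpha):
    """First-order (exponential) low-pass filter: y[k] = alpha*x[k] + (1-alpha)*y[k-1].
    Smaller alpha means stronger smoothing but more lag."""
    y, out = signal[0], []
    for x in signal:
        y = alpha * x + (1 - alpha) * y
        out.append(y)
    return out

def frame_skip(commands, step=2):
    """Keep every `step`-th command; step=2 sends every other frame."""
    return commands[::step]

# Hypothetical joint data: the leader holds 0.5 rad, and the follower picks up
# alternating electrical noise on top of it
leader = [0.5] * 100
follower = [0.5 + (0.05 if i % 2 == 0 else -0.05) for i in range(100)]
noise = [f - l for f, l in zip(follower, leader)]  # subtracting isolates the noise
smoothed = low_pass(follower, alpha=0.2)
commands = frame_skip(smoothed)
```

Frame skipping halves the number of commands for the same trajectory, so at a fixed command rate the motion plays back about twice as fast, at the cost of coarser motion.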

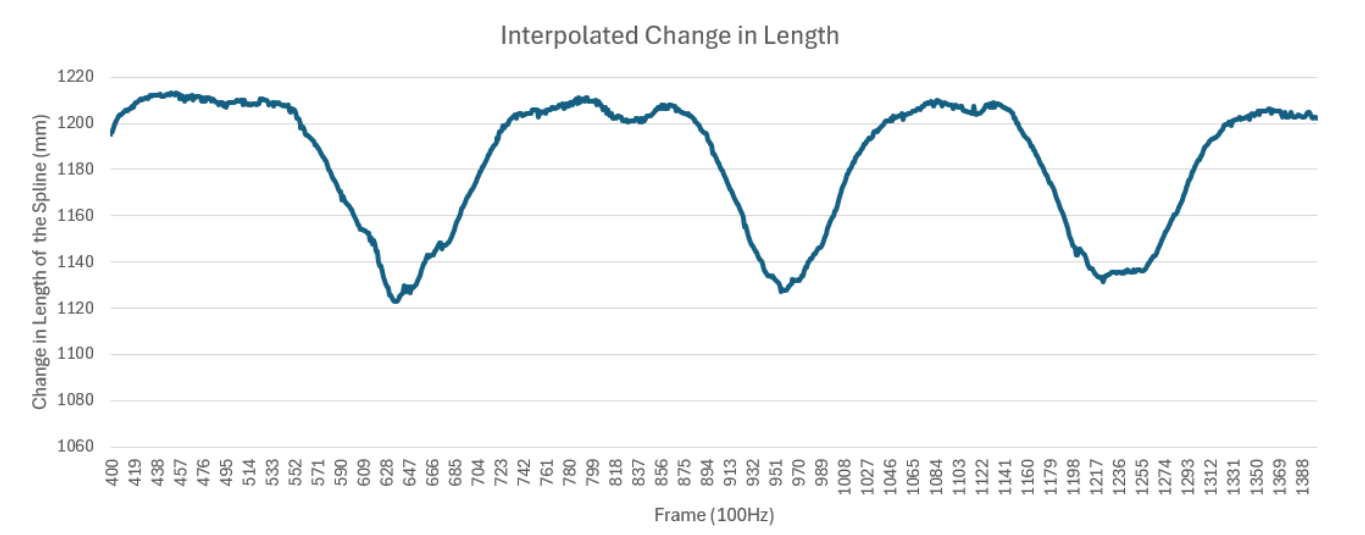

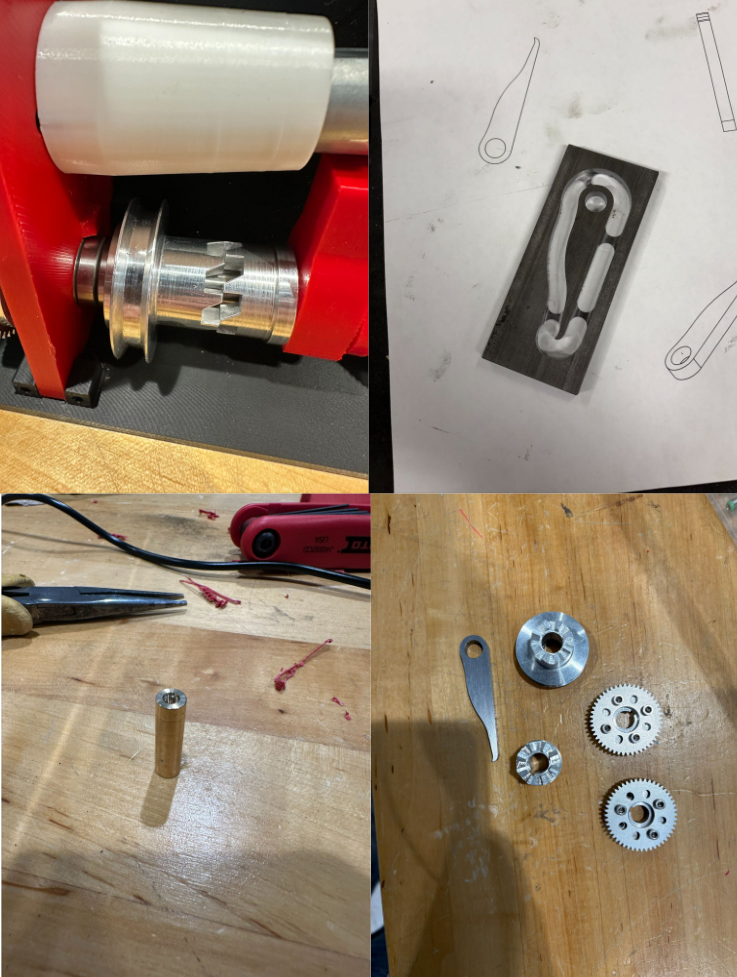

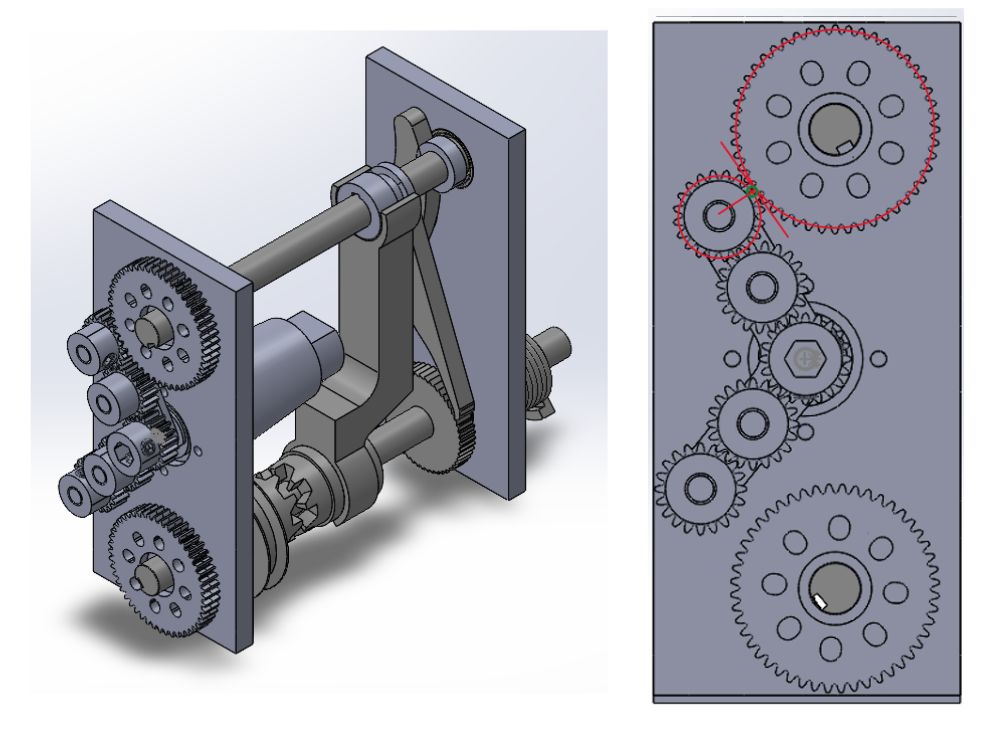

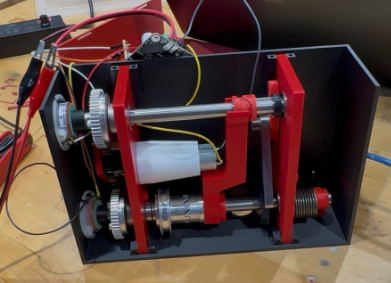

SESMA 3.0 - Soft Exosuit for patients with Spinal Muscular Atrophy (Capstone)

This project was an external device designed to assist type III SMA patients with sit-to-stand transitions. The device autonomously detected when the user wanted to stand and actuated a spring tied to a spool, and a cable routed down the wearer's leg pulled them up. It achieved 10% assistance, compared with 3-4% for the previous iteration, while also being much smaller: total volume decreased by 17% and width by 28%. The final prototype was sturdier, and the mechanism was relocated to the wearer's sides, which is more realistic for everyday use (wearers can now lean back in their chair).

Skills: Mechatronics, 3D splines, CAD, SolidWorks

The main challenge in this project was using a single motor both to charge the spring and to turn a cam that changed the mechanism's state. This was solved with a geared switching mechanism that shifted the motor's drive between the camshaft and the power shaft. Two leads were used to reduce the travel distance from one shaft to the other.

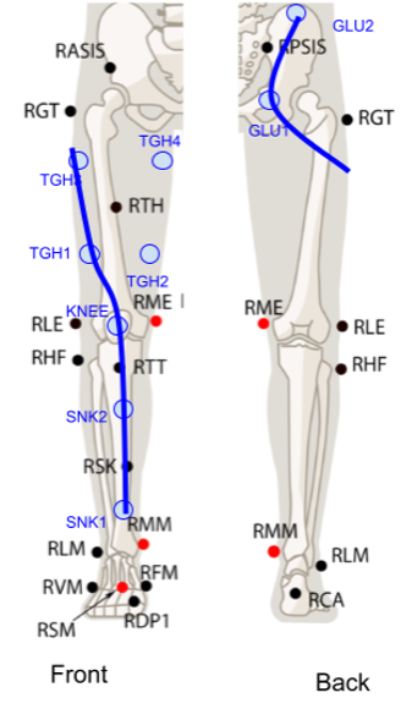

To autonomously detect a sit-to-stand (STS) transition, we ran tests in a motion capture setup, placing markers along the path a cable would take down a patient's leg. I fit a 3D spline through these marker positions to interpolate between them, then discretized the splines and summed the segment lengths to approximate how much the cable length changed from sitting to standing. We then compared this data with data from a paper that identified the point of maximum knee torque, and actuated the mechanism at that point.
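The cable-length estimate can be sketched as follows. This assumes a uniform Catmull-Rom spline as a stand-in for whatever 3D spline was actually fit (Catmull-Rom curves pass through every control point, matching the interpolation described); the marker coordinates are hypothetical.

```python
import math

def catmull_rom_point(p0, p1, p2, p3, t):
    """Point on a uniform Catmull-Rom segment between p1 (t=0) and p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def spline_length(points, samples_per_segment=50):
    """Approximate the length of a spline through 3D marker points by
    discretizing it and summing the straight-line pieces."""
    pts = [points[0]] + list(points) + [points[-1]]  # pad ends so the curve hits every marker
    length, prev = 0.0, points[0]
    for i in range(1, len(pts) - 2):
        for s in range(1, samples_per_segment + 1):
            cur = catmull_rom_point(pts[i - 1], pts[i], pts[i + 1], pts[i + 2],
                                    s / samples_per_segment)
            length += math.dist(prev, cur)
            prev = cur
    return length

# Hypothetical marker positions (m) along the cable route in two postures
sitting = [(0.0, 0.0, 0.50), (0.15, 0.0, 0.48), (0.30, 0.0, 0.40), (0.35, 0.0, 0.10)]
standing = [(0.0, 0.0, 0.95), (0.02, 0.0, 0.80), (0.03, 0.0, 0.55), (0.03, 0.0, 0.30)]
cable_change = spline_length(standing) - spline_length(sitting)
```

Increasing `samples_per_segment` refines the discretization; the sum of chords converges to the true arc length of the spline.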

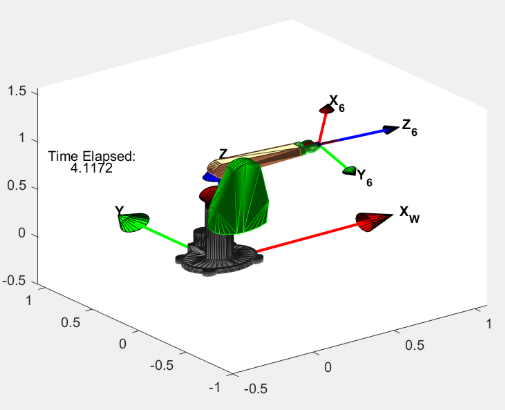

Robotic Arm Manipulation: Resolved Rates, Gradient to Compute Min Norm

This project combines assignments from ME 650, a graduate course at Stevens that covered optimization and mathematical design techniques for controlling serial manipulators. In this course, I applied various techniques to control manipulators in different contexts.

Skills: Resolved Rates, Null Space Projection, Jacobian, Cost Functions, MATLAB

The first project is a resolved rates simulation implemented for link speed optimization. A closed-loop resolved rates algorithm solved the inverse kinematics, allowing the robot to adhere to speed constraints while meeting tolerances of 1 mm in translation and 0.0524 rad (3°) in rotation. The results were simulated in MATLAB using first-order interpolation.
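A minimal sketch of closed-loop resolved rates for a hypothetical planar 2-link arm. At each step it commands a speed-capped Cartesian velocity toward the goal, maps it to joint rates with the inverse Jacobian, and integrates with a first-order (Euler) step until the 1 mm translational tolerance is met. The link lengths, speed cap, and time step are assumptions, and because a 2-link planar arm cannot control orientation independently, the rotational tolerance is not modeled here.

```python
import math

LINK1, LINK2 = 0.5, 0.4  # hypothetical link lengths (m)

def fk(q):
    """End-effector (x, y) of a planar 2-link arm."""
    return (LINK1 * math.cos(q[0]) + LINK2 * math.cos(q[0] + q[1]),
            LINK1 * math.sin(q[0]) + LINK2 * math.sin(q[0] + q[1]))

def jacobian(q):
    """2x2 Jacobian of fk with respect to (q1, q2)."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
            [LINK1 * c1 + LINK2 * c12, LINK2 * c12]]

def resolved_rates(q, goal, dt=0.01, v_max=0.2, tol=1e-3, max_steps=5000):
    """Closed-loop resolved rates: recompute the position error every step,
    command a speed-capped velocity toward the goal, solve J qdot = v,
    and integrate with a first-order (Euler) step."""
    q = list(q)
    for step in range(max_steps):
        x, y = fk(q)
        ex, ey = goal[0] - x, goal[1] - y
        dist = math.hypot(ex, ey)
        if dist < tol:
            return q, step
        speed = min(v_max, dist / dt)        # enforce the end-effector speed limit
        vx, vy = speed * ex / dist, speed * ey / dist
        (a, b), (c, d) = jacobian(q)
        det = a * d - b * c                  # approaches 0 near singularities (q2 = 0)
        q[0] += dt * (d * vx - b * vy) / det
        q[1] += dt * (-c * vx + a * vy) / det
    return q, max_steps

goal = fk([0.8, 0.9])
q_final, steps = resolved_rates([0.3, 1.2], goal)
```

Recomputing the error from forward kinematics every step is what makes the scheme closed loop: integration drift from the first-order update is corrected on the next iteration instead of accumulating.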

The second project used both the gradient of a curve and the Jacobian null-space projection to keep the end effector orthogonal to a surface. The robot's links and motion were simulated in MATLAB using a minimum-norm pseudoinverse redundancy resolution algorithm. Maintaining orthogonality to a surface is useful in applications such as welding or writing.
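The redundancy-resolution idea can be sketched for a hypothetical planar 3-link arm: the minimum-norm pseudoinverse handles the 2D end-effector velocity, and the gradient of a cost `H = 0.5 * (phi - phi_des)^2` on the tool angle is projected into the Jacobian's null space, so it reorients the tool toward a desired angle (for example, the surface normal) without disturbing the end-effector motion. The link lengths, cost function, and gain `k` are assumptions, not the course's exact formulation.

```python
import math

LINKS = (0.4, 0.3, 0.2)  # hypothetical link lengths (m)

def jacobian(q):
    """2x3 position Jacobian of a planar 3-link arm."""
    angles = [q[0], q[0] + q[1], q[0] + q[1] + q[2]]  # absolute link angles
    J = [[0.0] * 3, [0.0] * 3]
    for j in range(3):
        # joint j rotates every link from j out to the tip
        J[0][j] = -sum(LINKS[i] * math.sin(angles[i]) for i in range(j, 3))
        J[1][j] = sum(LINKS[i] * math.cos(angles[i]) for i in range(j, 3))
    return J

def pinv_2x3(J):
    """Minimum-norm right pseudoinverse J^T (J J^T)^-1 of a full-rank 2x3 J."""
    a = sum(v * v for v in J[0])
    b = sum(u * v for u, v in zip(J[0], J[1]))
    d = sum(v * v for v in J[1])
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[J[0][row] * inv[0][col] + J[1][row] * inv[1][col] for col in range(2)]
            for row in range(3)]

def joint_rates(q, v, phi_des, k=1.0):
    """qdot = J^+ v + (I - J^+ J) z, with z = -k * grad H and
    H = 0.5 * (phi - phi_des)^2, where phi = q1 + q2 + q3 is the tool angle."""
    J = jacobian(q)
    Jp = pinv_2x3(J)
    err = sum(q) - phi_des
    z = [-k * err] * 3                       # -k * grad H, since dphi/dqi = 1
    N = [[(1.0 if r == c else 0.0) - sum(Jp[r][m] * J[m][c] for m in range(2))
          for c in range(3)] for r in range(3)]
    return [sum(Jp[r][m] * v[m] for m in range(2)) + sum(N[r][c] * z[c] for c in range(3))
            for r in range(3)]

q = [0.3, 0.6, 0.4]
qd = joint_rates(q, v=[0.05, -0.02], phi_des=math.pi / 2)  # phi_des: e.g. surface-normal angle
```

Since `J N = 0`, the secondary term changes only the arm's posture; the end-effector velocity is still exactly `v`.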

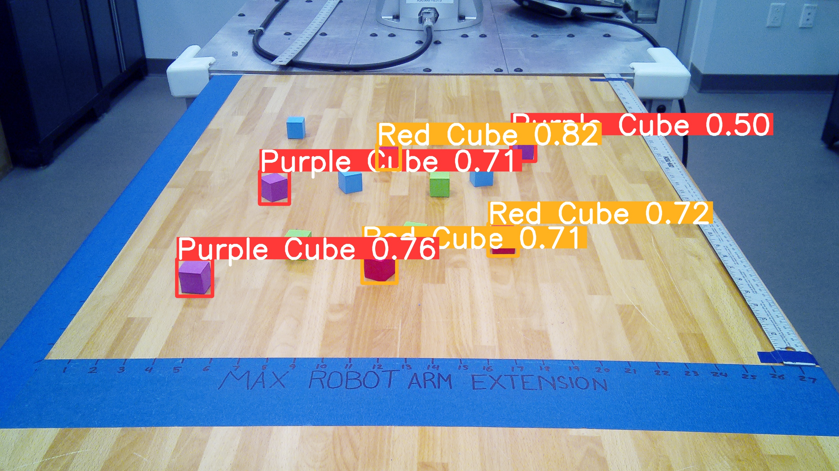

Camera Vision - Doosan H2515 Pick and Place Algorithm

Collected and annotated a dataset and trained a YOLOv8 model to identify the pixel location and color class of cubes. Pixel coordinates were converted directly into a numerical inverse kinematics solution for arm navigation. This function was then used in path planning routines, whose commands were sent to the robot arm for execution over a client-server connection emulating the TCP protocol.

Skills: OpenCV, YOLOv8, Python, Pick and Place

Data was collected using a webcam overlooking foam cubes scattered across the workspace. The images were annotated in Roboflow, then exported and used to train YOLOv8. Because the dataset was small, the model had lower confidence for colors close to the table's color, such as orange or yellow, so higher-contrast colors, which were identified with higher confidence, were used instead. The center of each bounding box was found using OpenCV, and the coordinates were parameterized and scaled to fit the workspace. The joint angles were interpolated, which worked in this case, though a resolved rates algorithm would generalize better to other cases.
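The pixel-to-workspace step can be sketched as a linear scaling, assuming an overhead camera with negligible lens distortion and a rectangular workspace aligned with the image axes. The image size and workspace bounds are hypothetical, and the box center is computed with plain arithmetic here rather than OpenCV.

```python
def bbox_center(x1, y1, x2, y2):
    """Center of an axis-aligned bounding box given by its corner pixels."""
    return ((x1 + x2) / 2, (y1 + y2) / 2)

def pixel_to_workspace(u, v, image_size, workspace):
    """Linearly scale pixel (u, v) into robot workspace coordinates.

    image_size = (width_px, height_px)
    workspace  = (x_min, x_max, y_min, y_max), e.g. in mm (hypothetical)
    """
    w, h = image_size
    x_min, x_max, y_min, y_max = workspace
    x = x_min + (u / w) * (x_max - x_min)
    # Image rows grow downward, so flip v for a y-up robot frame
    y = y_max - (v / h) * (y_max - y_min)
    return x, y

u, v = bbox_center(300, 220, 340, 260)
target = pixel_to_workspace(u, v, (640, 480), (200, 600, -200, 200))
```

A plain linear map is only valid under the stated assumptions; a tilted camera would need a homography or a full camera calibration instead.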

Once the joint angles were computed, they were sent to the robot as joint commands using the Doosan Robot Language, a Python-based framework from Doosan. I used Python's socket library to establish a client-server connection that sent these commands to the robot for execution in the workspace.
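A minimal sketch of the client-server link using Python's standard `socket` module. The message format (six comma-separated joint angles terminated by a newline) and the `OK` reply are hypothetical, and the robot side is stood in for by a local test server; on the real system the commands were executed through the Doosan Robot Language, whose API is not shown here.

```python
import socket
import threading

def serve_once(server_sock, received):
    """Hypothetical robot-side server: accept one client, read one
    newline-terminated joint command, and acknowledge it."""
    conn, _ = server_sock.accept()
    with conn:
        buf = b""
        while not buf.endswith(b"\n"):
            chunk = conn.recv(1024)
            if not chunk:
                break
            buf += chunk
        received.append([float(v) for v in buf.decode().strip().split(",")])
        conn.sendall(b"OK\n")

def send_joint_command(host, port, joints_deg):
    """Client side: send six joint angles as one comma-separated line."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall((",".join(f"{j:.3f}" for j in joints_deg) + "\n").encode())
        return sock.recv(1024).decode().strip()

# Local demo on an OS-assigned port
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]
received = []
worker = threading.Thread(target=serve_once, args=(server, received))
worker.start()
reply = send_joint_command("127.0.0.1", port, [0, -15, 90, 0, 75, 0])
worker.join()
server.close()
```

Framing each command with a newline lets the receiver tell where one message ends, since TCP delivers a byte stream rather than discrete messages.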

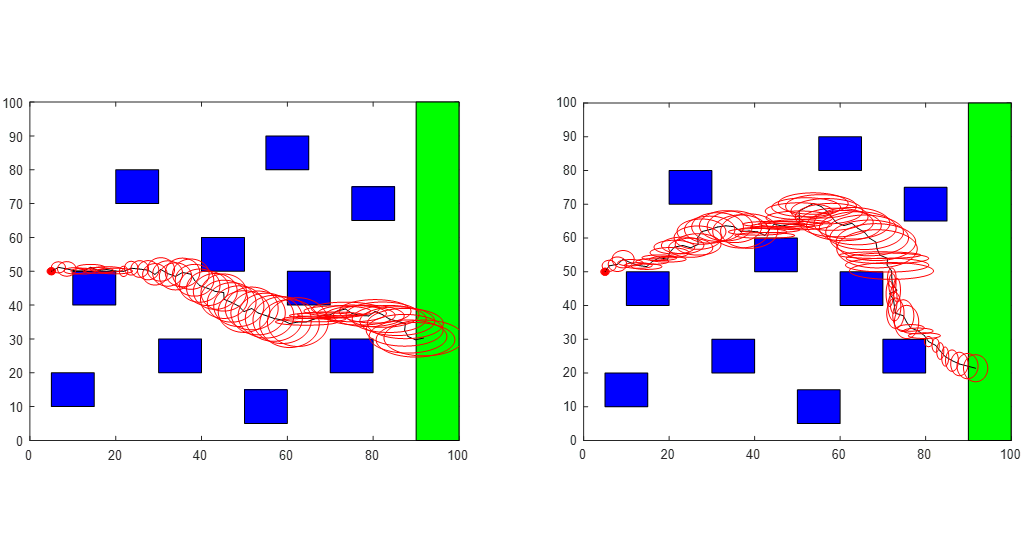

SLAM and RRT Implementation for 2D Mobile Robot with Obstacle Avoidance

Rapidly-exploring Random Trees (RRT) were used to plan a collision-free path by sampling and exploring the environment from a start state to a goal state. SLAM was then used to estimate and visualize the uncertainty along the robot's trajectory. Known obstacles were modeled as landmarks to reduce this uncertainty: the robot's confidence decreased along stretches of the path where it did not see an obstacle, and increased again once it localized against one.

Skills: RRT, SLAM, Kalman Filter, MATLAB

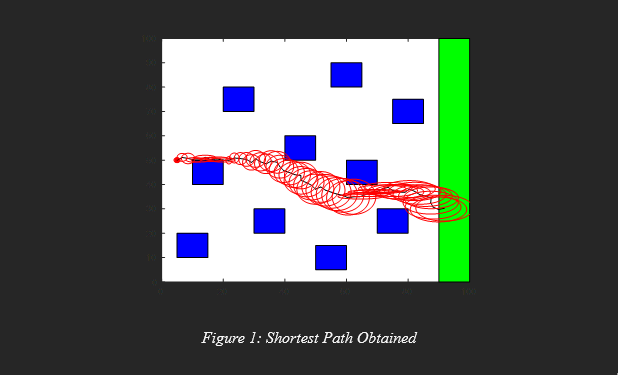

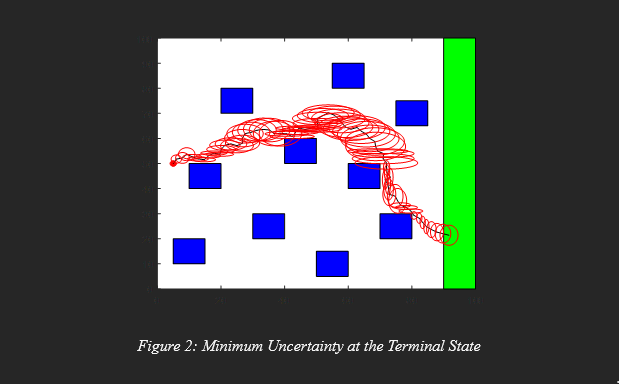

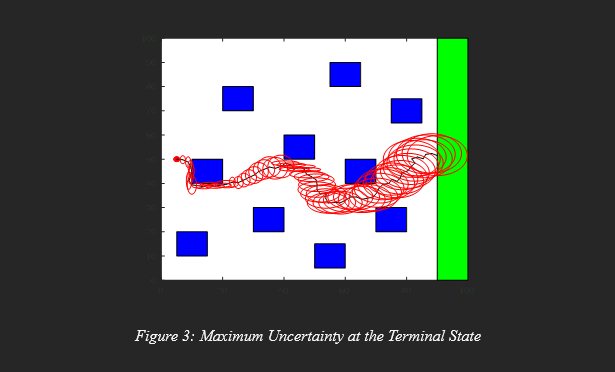

I learned how sampling-based motion planning and state estimation can be combined for reliable navigation. RRT was used iteratively to find paths, and SLAM provided a framework for estimating the error. Because the obstacle locations were known, the obstacles served as landmarks for sensor measurements.
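A minimal RRT sketch in 2D with circular obstacles, assuming uniform sampling, a fixed extension step, and straight-line collision checks. The map, obstacle, and parameters are hypothetical; the project itself was implemented in MATLAB.

```python
import math
import random

def collision_free(p, q, obstacles, step=0.05):
    """Sample the segment p->q and reject it if any sample lies inside a
    circular obstacle (cx, cy, radius)."""
    n = max(1, int(math.dist(p, q) / step))
    for i in range(n + 1):
        t = i / n
        x, y = p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= rad for cx, cy, rad in obstacles):
            return False
    return True

def rrt(start, goal, obstacles, bounds, step_size=0.5, goal_tol=0.5,
        max_iters=5000, rng=None):
    """Grow a tree from start by sampling uniformly in bounds
    (x_min, x_max, y_min, y_max); return a start-to-goal path or None."""
    rng = rng or random.Random()
    nodes, parent = [start], {0: None}
    for _ in range(max_iters):
        sample = (rng.uniform(bounds[0], bounds[1]), rng.uniform(bounds[2], bounds[3]))
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        s = min(step_size, d)                   # steer at most step_size toward the sample
        new = (near[0] + s * (sample[0] - near[0]) / d,
               near[1] + s * (sample[1] - near[1]) / d)
        if not collision_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol and collision_free(new, goal, obstacles):
            nodes.append(goal)
            parent[len(nodes) - 1] = len(nodes) - 2
            path, i = [], len(nodes) - 1
            while i is not None:                # walk parent links back to the start
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None

obstacles = [(5.0, 5.0, 1.5)]                   # hypothetical known obstacle
path = rrt((0.0, 0.0), (10.0, 10.0), obstacles, (0.0, 10.0, 0.0, 10.0),
           rng=random.Random(0))
```

Running `rrt` with different random seeds yields different trajectories, which is one way to build a batch of candidate paths and compare them.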

The RRT algorithm generated 100 trajectories, and the final plots show the most extreme cases: the shortest path and the paths with the minimum and maximum uncertainty at the goal state. The Kalman filter computed the uncertainty at each time step, which was visualized in MATLAB.
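The growth and shrinkage of uncertainty can be illustrated with a scalar Kalman filter, a deliberate simplification of the full 2D SLAM filter: the variance grows by a process-noise term `q` at every prediction step and shrinks whenever a landmark measurement with noise variance `r` is available. The noise values and the visibility window are hypothetical.

```python
def propagate_uncertainty(n_steps, visible, q=0.01, r=0.04, p0=0.0):
    """Scalar Kalman filter variance for a robot moving along a line.

    Predict: P <- P + q (motion noise accumulates every step).
    Update:  if a landmark is visible at step k, K = P / (P + r) and P <- (1 - K) P.
    """
    P, history = p0, []
    for k in range(n_steps):
        P = P + q
        if visible(k):
            K = P / (P + r)
            P = (1 - K) * P
        history.append(P)
    return history

# Hypothetical run: a landmark (known obstacle) is in view only for steps 20-29
hist = propagate_uncertainty(60, visible=lambda k: 20 <= k < 30)
```

The variance climbs linearly while no landmark is visible, drops sharply at the first measurement, and starts climbing again once the landmark is out of view.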

Hand Squeezing Device for Grip Strength Rehabilitation

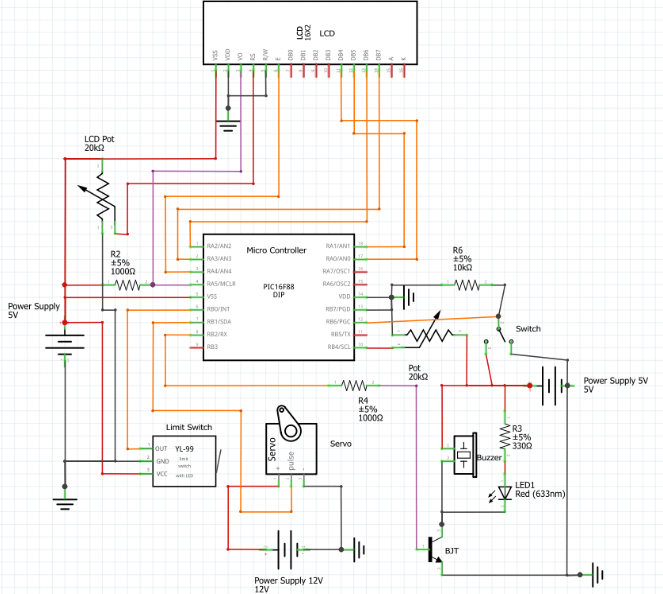

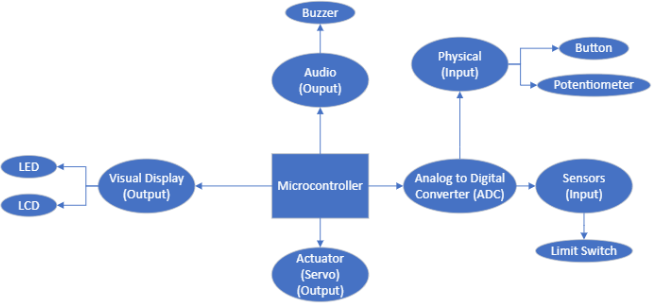

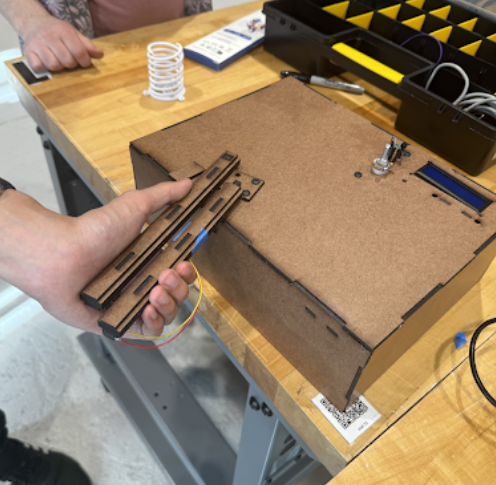

This project was the culmination of ME 522, a graduate mechatronics course at Stevens. The knob on top of the box sets the "squeezing strength", which is shown on the LCD screen. The resulting force on the grippers is adjusted by a four-bar linkage that slides a spring. A limit switch on the handle counts the repetitions.

Skills: Mechatronics, PICBasic Pro, PIC16F88 Microcontroller

This device was designed to alleviate joint pain and rebuild hand and forearm strength. It could be used in PT clinics or doctors' offices to test and monitor grip strength and document the effectiveness of treatment. The device used a PIC16F88 microcontroller programmed in PICBasic Pro. Polling was used to detect when the limit switch was triggered; each trigger activated a buzzer and updated the rep count on the LCD. One challenge was coding the force reading: the "ADCIN" command was needed instead of the "Pot" function to read the potentiometer output and translate it into a force.
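The polling logic can be sketched in Python as a simulation (the device itself ran PICBasic Pro on the PIC16F88). It assumes the potentiometer is read as a 10-bit ADC value (0 to 1023), mapped to a hypothetical scale of 10 strength levels, and that a rep is counted on each released-to-pressed transition of the limit switch.

```python
ADC_MAX = 1023  # 10-bit ADC full scale

def adc_to_level(raw, levels=10):
    """Map a 10-bit potentiometer reading to a strength level 1..levels
    using integer math, as a small microcontroller would."""
    return 1 + raw * (levels - 1) // ADC_MAX

def count_reps(switch_samples):
    """Count reps from polled limit-switch samples (1 = pressed).
    Only released->pressed edges count, so holding the grip closed
    registers a single rep."""
    reps, prev = 0, 0
    for s in switch_samples:
        if s and not prev:
            reps += 1  # on the device: sound the buzzer and update the LCD
        prev = s
    return reps

samples = [0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0]  # hypothetical polled switch states
reps = count_reps(samples)
```

Counting edges rather than raw "pressed" samples keeps a fast polling loop from counting one squeeze many times.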

The force was transmitted through a four-bar linkage and a spring. The four-bar linkage stretched the spring, which, due to an extremely limited budget, was a rubber band. A future version with a real budget would use a proper spring with a wider force range for more practical use.

Robot End Effector for Pick and Place

Prof. Kishore Pochiraju purchased a Doosan H2515 6-DoF arm for the PROOF Lab, which needed an end effector for pick and place. A gripper end effector was designed and 3D printed for the lab; its jaws open and close via a motor controller driven by a PWM signal from an Arduino. The Arduino was controlled by a digital IO on the robot flange, which allowed a direct connection to the robot.

Skills: SolidWorks, Mechatronics, 3D Printing

The digital IO on the robot flange output 24 V. I stepped this voltage down to 5 V with a voltage divider circuit so the Arduino could read it. The Arduino then sent a PWM signal to a motor controller, which actuated the jaws through a gear and hinge mechanism.
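The voltage divider follows Vout = Vin * R2 / (R1 + R2). The sketch below picks the top resistor for a 24 V to 5 V step-down, assuming a hypothetical 10 kΩ bottom resistor and an unloaded output (a high-impedance Arduino input); the resistor values used on the actual gripper are not given.

```python
def divider_output(v_in, r1, r2):
    """Unloaded divider output: Vout = Vin * R2 / (R1 + R2)."""
    return v_in * r2 / (r1 + r2)

def r1_for_target(v_in, v_out, r2):
    """Top resistor R1 that gives v_out for a chosen bottom resistor R2."""
    return r2 * (v_in - v_out) / v_out

r2 = 10_000                                # hypothetical bottom resistor (ohms)
r1 = r1_for_target(24.0, 5.0, r2)          # 38 kOhm gives exactly 5 V
v_std = divider_output(24.0, 39_000, r2)   # nearest standard value, slightly under 5 V
```

With the standard 39 kΩ value the output is about 4.9 V, which a 5 V Arduino still reads as logic high.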